Terraform Data Toggles

The Problem

Terraform modules allow you to reuse code and treat large swaths of interlocking infrastructure as black boxes. They are configurable, composable, and, if designed right, flexible.

Recently, while working on a Terraform module, I ran into an interesting issue regarding flexibility.

Suppose we have a generic module for spinning up scheduled Lambda functions. It provisions the function itself, EventBridge scheduled triggers, execution and function roles, and so on, with the Lambda code stored in S3.

Ideally, the module should know how to provision the S3 bucket with the correct configuration. However, a user may well want to invoke this module many times without getting a new S3 bucket for each instance.

Stating the problem generically:

A module should know how to provision everything required to create a functional unit; however, for certain components, the module should also accommodate the caller bringing their own resource.

The Pattern

The solution is a data toggle. It conditionally creates resources internally, or accepts preexisting, user-provided resources.

Data Toggles in Action

A data toggle can be simple to implement. Add a boolean for the relevant resource that indicates whether or not the module should create that particular resource. In our example above with the S3 bucket:

```hcl
variable "bucket_name" {
  type        = string
  description = "The bucket name in which this module's data should be added. Must be globally unique."
}

variable "create_bucket" {
  type        = bool
  description = "Whether or not this module should create the S3 bucket in which the lambdas will be stored."
  default     = false
}
```

Then in your main code, set the resource to be conditional with count, and use a conditional data source to act as a broker for either case, like so:

```hcl
resource "aws_s3_bucket" "target" {
  count  = var.create_bucket ? 1 : 0
  bucket = var.bucket_name
}

data "aws_s3_bucket" "target" {
  bucket = var.create_bucket ? aws_s3_bucket.target[0].id : var.bucket_name
}
```

The interesting part of this solution is the data source. From the Terraform documentation:

Terraform defers reading data resources[1] in the following situations:

- At least one of the given arguments is a managed resource attribute or other value that Terraform cannot predict until the apply step.

- The data resource depends directly on a managed resource that itself has planned changes in the current plan.

- The data resource has custom conditions and it depends directly or indirectly on a managed resource that itself has planned changes in the current plan.
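The third condition refers to custom conditions, meaning lifecycle preconditions and postconditions, which data blocks accept from Terraform 1.2 onward. As a sketch, here is the broker data source from above with a postcondition added. The region check and the data.aws_region lookup are illustrative assumptions, motivated by Lambda's requirement that a function's code bucket be in the same region as the function; they are not part of the original module. The check runs against whichever bucket the toggle selected.

```hcl
# Region of the default AWS provider (assumption: a single-region setup).
data "aws_region" "current" {}

data "aws_s3_bucket" "target" {
  bucket = var.create_bucket ? aws_s3_bucket.target[0].id : var.bucket_name

  lifecycle {
    # Checked after the bucket is read, for both branches of the toggle.
    # Note: in AWS provider v4/v5 the region's name is exposed as .name.
    postcondition {
      condition     = self.region == data.aws_region.current.name
      error_message = "The S3 bucket must be in the same region as the Lambda functions that load code from it."
    }
  }
}
```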

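It is the first two items that we are exploiting. When the module creates the bucket, the data source's bucket argument comes from aws_s3_bucket.target[0].id, which is unknown until apply, and the data source depends directly on a resource with planned changes.

If create_bucket is set to true, the module creates the aws_s3_bucket resource, and reading the data source is deferred to the apply step, after the bucket has been created.

If create_bucket is set to false, aws_s3_bucket is not created, the data source is read during the plan, and the plan fails with an error if the bucket cannot be found.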

The data source now represents both the externally created resource and the internally created one, whichever one is toggled. Any reference to the bucket can be performed solely through the data source as an interface.

This is a happy bonus. Because of it, all references to the data-toggled object can now be simplified. Where we might otherwise have written:

```hcl
s3_bucket = var.create_bucket ? aws_s3_bucket.target[0].id : var.bucket_name
```

We now only have to write the following:

```hcl
s3_bucket = data.aws_s3_bucket.target.id
```

The ternary operator that we’re replacing is now captured in the data source itself, and does not need to be repeated.
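For illustration, here is how the rest of a hypothetical scheduled-lambda module might consume the toggle. aws_lambda_function and aws_iam_policy_document are real AWS provider types, but var.function_name, var.s3_key, aws_iam_role.lambda, the runtime, and the handler are hypothetical values assumed to be defined or chosen elsewhere in the module.

```hcl
resource "aws_lambda_function" "this" {
  function_name = var.function_name         # hypothetical variable
  role          = aws_iam_role.lambda.arn   # hypothetical role defined elsewhere
  runtime       = "python3.12"              # assumption: any supported runtime
  handler       = "main.handler"            # hypothetical handler

  # The bucket resolves through the data source, so this block does not
  # care whether the module created the bucket or the caller supplied it.
  s3_bucket = data.aws_s3_bucket.target.id
  s3_key    = var.s3_key # hypothetical variable
}

# The ARN comes from the same interface, e.g. for a policy granting
# read access to objects in the bucket.
data "aws_iam_policy_document" "read_code" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${data.aws_s3_bucket.target.arn}/*"]
  }
}

# Expose the brokered bucket so callers can reuse it elsewhere.
output "bucket_arn" {
  value = data.aws_s3_bucket.target.arn
}
```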

Variations

The first place to consider variations on this pattern is with the boolean default value. The module maintainer should have a good sense of when users will bring preexisting resources more often than not.

KMS keys, for example, are frequently used more broadly than a single solution. A key is often used to encrypt an entire class of objects, such as everything in a whole application stack: the snapshots, at-rest data, etc. for each of compute, database, and cache. For this reason, you might consider leaving the default at false. An S3 bucket or an ACM certificate, on the other hand, would often be created by default, depending on the module's use case.

There is a clear gray area here, but it is worth considering, because the default is effectively permanent once chosen: changing it later would break backward compatibility for existing callers.

In the example from the first section, the module doesn’t even need the boolean if the module knows how to create a unique bucket name per module instance.

```hcl
# variables.tf

variable "bucket_name" {
  type        = string
  description = "The bucket name in which this module's data should be added. Must be globally unique. Leave empty to have the module create a bucket."
  default     = ""
}

# main.tf

# Requires the hashicorp/random provider. Only generate a suffix when the
# module is creating its own bucket.
resource "random_string" "target" {
  count   = var.bucket_name == "" ? 1 : 0
  length  = 8
  special = false
  upper   = false
}

resource "aws_s3_bucket" "target" {
  count  = var.bucket_name == "" ? 1 : 0
  bucket = "relevant-name-${random_string.target[0].result}"
}

data "aws_s3_bucket" "target" {
  bucket = var.bucket_name == "" ? aws_s3_bucket.target[0].id : var.bucket_name
}
```

Note that the above example only works for resources that must exist for the solution to function: an empty name always means "create one," so no value is left to mean "none."

Consider the case where a solution uses a KMS key. There are three distinct situations: the user provides the key, the module generates the key, or the solution is unencrypted. This requires the boolean and some additional logic.

```hcl
# variables.tf

variable "key_id" {
  type        = string
  description = "The ID, ARN, or alias of an existing KMS key."
  default     = ""
}

variable "create_kms_key" {
  type        = bool
  description = "Whether or not this module should create the KMS key."
  default     = false
}

# main.tf

locals {
  # Handle the compound logic in a local variable to determine if encryption is in use.
  # This will make the key easier to reference.
  key_in_use = var.key_id != "" || var.create_kms_key
}

resource "aws_kms_key" "this" {
  count       = var.create_kms_key ? 1 : 0
  description = "KMS key for encrypting relevant parts of this module."
}

data "aws_kms_key" "this" {
  # This toggle is now conditional for the case where no encryption is used
  count  = local.key_in_use ? 1 : 0
  key_id = var.create_kms_key ? aws_kms_key.this[0].id : var.key_id
}
```

The key_id argument in the data source above could instead be written like this:

```hcl
key_id = var.key_id != "" ? var.key_id : aws_kms_key.this[0].id
```

This swaps which input takes precedence when both key_id and create_kms_key are set: the original version prefers the module-created key, while this version prefers the caller's key_id.
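If you would rather reject the ambiguous case than pick a winner, one option is to guard the module-managed key with a precondition. This is a sketch assuming Terraform 1.2 or later, which supports lifecycle preconditions. Because conditions are checked per instance, the precondition is only evaluated when the key actually has an instance, that is, when create_kms_key is true.

```hcl
resource "aws_kms_key" "this" {
  count       = var.create_kms_key ? 1 : 0
  description = "KMS key for encrypting relevant parts of this module."

  lifecycle {
    # Fail the plan rather than create a key that one of the two inputs
    # would end up overriding when both are set.
    precondition {
      condition     = var.key_id == ""
      error_message = "Set either key_id or create_kms_key = true, not both."
    }
  }
}
```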

Finally, when referencing the toggle, return null for the case where no encryption is used, since the data source has no instances then:

```hcl
# elsewhere in main.tf
kms_key = local.key_in_use ? data.aws_kms_key.this[0].id : null
```
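Terraform's built-in one function (available since Terraform 0.15) offers a shorter way to express the same null-out: it returns the single element of a zero- or one-element list, or null when the list is empty. A minimal sketch, where the local name kms_key_arn is hypothetical:

```hcl
locals {
  # The splat yields an empty list when encryption is off (count = 0),
  # and one() turns that into null; otherwise it returns the key's ARN.
  kms_key_arn = one(data.aws_kms_key.this[*].arn)
}
```

Any argument that accepts null, such as kms_key_arn on aws_lambda_function, can then take local.kms_key_arn directly, without repeating local.key_in_use.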

Example

Create Bucket with the Module

Let’s prove the concept out. I’ve written a module based on the initial example in this article. It toggles the creation of an S3 bucket and places a simple text file into the bucket. The user provides a bucket name and either has the module create a bucket with that name or points the module at an existing bucket of that name. We are going to let the module create the bucket first, then remove the bucket from the state file and pass it into the module as if we had created it ourselves, toggling the creation mechanism in each case.

You can check out the source on GitHub, and the module is also published in my Terraform Cloud module registry, so it can be referenced directly.

```hcl
module "data_toggle_demo" {
  source        = "app.terraform.io/xjables/data-toggle-demo/aws"
  version       = "0.0.2"
  bucket_name   = "<provide a globally unique bucket name>"
  create_bucket = true
}
```

Make sure to set up a default provider before applying. This module uses resources introduced in the AWS provider v4.0 S3 refactor (such as aws_s3_object, which appears in the plan output), so your provider version constraint must allow “>= 4.0.0”.
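A minimal root configuration satisfying that requirement might look like the following. The region is a placeholder assumption, so substitute your own.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0.0" # the module relies on the v4.0 S3 refactor
    }
  }
}

# Default (unaliased) provider configuration, inherited by the module.
provider "aws" {
  region = "us-east-1" # placeholder; use your own region
}
```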

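I would like to draw your attention to the following two lines in the plan (the configuration I ran named the module block toggle_test, which is why the addresses differ from the block above):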

```
# module.toggle_test.data.aws_s3_bucket.target will be read during apply
# module.toggle_test.aws_s3_bucket.target[0] will be created
```

The second item is the bucket being created by the module as expected.

The first item is the data source that points to it. Data sources show up in the plan only when they will be resolved in the apply phase. As mentioned above, this happens when the data source references an object that changes in this plan. Since the bucket it references is being created, the data source is read during the apply phase, only after the bucket creation occurs. If we ran a second plan after this apply, assuming we made no manual changes, the data source would not show up in the plan as the bucket it references would not experience changes.

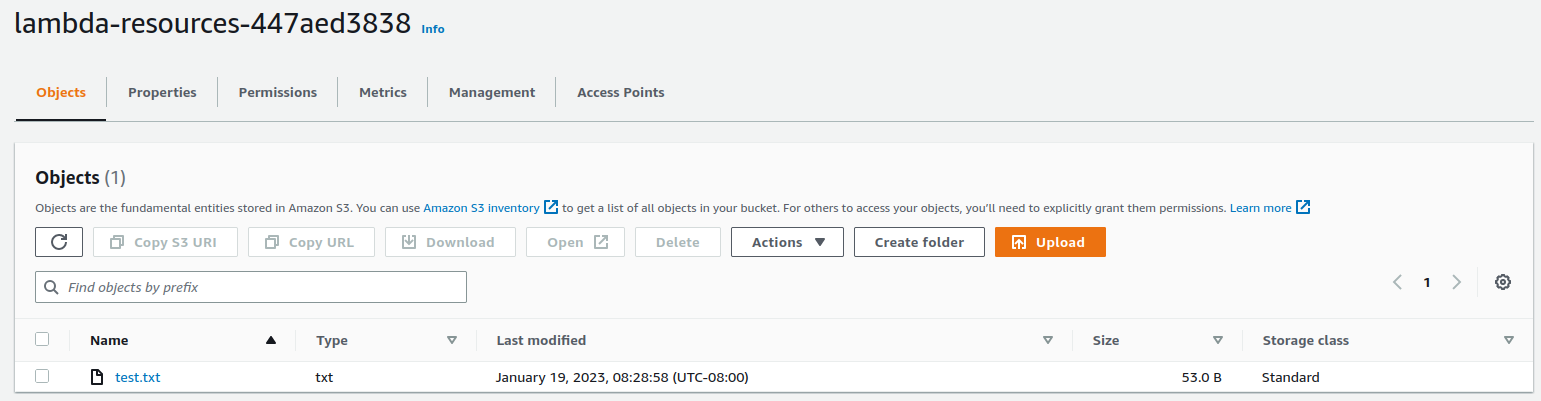

After apply, you should see your bucket is created with a text file uploaded to it.

Use a Preexisting Bucket

Let’s now use the bucket we just created as a preexisting resource, and toggle the module to accept it. Delete the test object from the bucket, then remove the state file and its backup (terraform.tfstate and terraform.tfstate.backup, assuming the default local backend).

Invoke the module with the create_bucket variable removed (it defaults to false).

```hcl
module "data_toggle_demo" {
  source      = "app.terraform.io/xjables/data-toggle-demo/aws"
  version     = "0.0.2"
  bucket_name = "<globally unique bucket name>"
}
```

Our plan is now simpler:

```
Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # module.toggle_test.aws_s3_object.test will be created
  + resource "aws_s3_object" "test" {
      + acl                    = "private"
      + bucket                 = "lambda-resources-447aed3838"
      + bucket_key_enabled     = (known after apply)
      + content_type           = (known after apply)
      + etag                   = (known after apply)
      + force_destroy          = false
      + id                     = (known after apply)
      + key                    = "/test.txt"
      + kms_key_id             = (known after apply)
      + server_side_encryption = (known after apply)
      + source                 = "terraform-aws-data-toggle-demo/test.txt"
      + storage_class          = (known after apply)
      + tags_all               = (known after apply)
      + version_id             = (known after apply)
    }

Plan: 1 to add, 0 to change, 0 to destroy.
```

As expected, the data source no longer appears in the plan: it is read during the plan phase, because its bucket argument is the bucket name string itself rather than an attribute of an object inside the module that changes during this plan.

Applying will push the test.txt file to your preexisting bucket.

Interesting Side Note

Notice that in the steps above we reused the bucket that was initially created by the module. More broadly, we refactored the toggled object to be sourced from outside of the module. The small number of resources in the module made this easy. When refactoring a larger module, you likely don’t want to remove every resource from the state by deleting the state file. In that case, you can remove the resource from the state individually:

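```shell
$ terraform state rm 'module.data_toggle_demo.aws_s3_bucket.target[0]'
```

(The quotes keep shells such as bash and zsh from treating the brackets as a glob pattern.)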

Then toggle the module to not create the bucket, as we did before. There may be more than one resource to remove, so proceed with caution.

This ability to refactor toggled objects is handy, particularly for composability when a module may or may not be invoked many times. If you only need one instance of the module, let the module handle the toggleable, common object. If the use case changes and you need to subdivide the workload across many instances of that module, refactor the common resource out into your root module, and toggle all module instances not to create it.
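As a sketch of that refactor, the root module below owns the shared bucket and passes it to several instances of a hypothetical ./modules/scheduled-lambda module with the toggle turned off. The module path, the instance keys, and the bucket name placeholder are all illustrative assumptions; module for_each requires Terraform 0.13 or later.

```hcl
# The common resource now lives in the root module.
resource "aws_s3_bucket" "shared" {
  bucket = "<a globally unique bucket name>"
}

module "scheduled_lambda" {
  source   = "./modules/scheduled-lambda"   # hypothetical local module
  for_each = toset(["reports", "cleanup"])  # hypothetical instance keys

  # Every instance reuses the shared bucket instead of creating its own.
  bucket_name   = aws_s3_bucket.shared.id
  create_bucket = false
}
```

If the shared bucket is created in the same run, bucket_name is unknown at plan time, so each instance's data source read is deferred to apply, matching the first deferral rule quoted earlier. Once the bucket exists, the reads happen during the plan again.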

When to Use

Like any design pattern, data toggles need to serve the solution to the problem, not their own novelty. They do not replace good design practices. If you find your module is getting too complex, the solution is not to make half of it toggleable; it is to refactor it into multiple composable modules that the user can piece together.

The best candidates for data toggles are small subsets of resources that are often referenced from many composed pieces of architecture. These might include S3 buckets, KMS keys, EC2 key pairs, ACM certificates, etc. Global resources often fit this category well; many enterprise infrastructures split the creation of their large-scale global resources into a separate module.

Notice that any resource you are trying to toggle requires you to write variables in your module to accommodate the creation of that object from scratch. So if you wanted to toggle something complicated like a CloudFront distribution, you would need to include variables for all of the CloudFront parameters you wanted the end user to be able to set. For this reason, data toggles should not be used for complicated objects. Instead, use them for simple objects that users are already likely to have at their disposal before deploying the module.

[1] Sic: Terraform used to refer to data sources as data resources, hence the reference to the old name here.